Inside the Stack: How AI Hardware Devices Are Built and Launched

At Engineering Lab, we design and build intelligent products from concept to production. With the rapid rise of generative AI and voice-driven systems, we’re now helping startups and innovators bring AI-powered devices into the real world. This is hardware that doesn’t just sense, but listens, understands, and responds.

If you're considering launching a voice-enabled or LLM-integrated device, here's a look at what it takes, and how we can help you do it faster and smarter.

AI technologies aren’t the black box they once were. Today, we can reach into that mystery, harness powerful machine learning models, and build them directly into our own products, turning complexity into something we can hold, shape, and use.

A pile of resistors, capacitors, and connectors in their bags is nothing special on its own. But when brought together with intention and intelligence, they become part of something far greater. Like AI, the value isn't in the parts, it's in how they work together.

The Core Architecture of an AI Device

Building an AI hardware product goes beyond soldering a microphone onto a PCB. There’s a whole pipeline that must run smoothly, securely, and efficiently:

Audio Input

High-quality microphones (often MEMS) pick up sound. Proper analog front ends and ADCs ensure clean signal capture.

On-Device Processing

Microcontrollers or microprocessors handle:

Noise reduction & beamforming

Voice activity detection

Local wake-word processing
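Voice activity detection decides which audio is worth processing further, which saves both power and upload bandwidth. Below is a minimal energy-threshold detector. It is written in Python for readability; on an MCU it would normally live in C firmware or a vendor DSP library. It assumes 16 kHz, 16-bit mono PCM. The `threshold` and `hangover` values are illustrative assumptions rather than tuned figures, and production devices typically use more robust, model-based detectors.

```python
import math
from typing import List, Sequence


def frame_rms(frame: Sequence[int]) -> float:
    """Root-mean-square level of one frame of signed 16-bit PCM samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_voice(samples: Sequence[int], frame_len: int = 320,
                 threshold: float = 500.0, hangover: int = 5) -> List[bool]:
    """Label each complete frame as speech (True) or silence (False).

    frame_len=320 is 20 ms at an assumed 16 kHz sample rate; a trailing
    partial frame is ignored. After a loud frame, `hangover` keeps the label
    raised for a few more frames so short pauses between words do not split
    one utterance into several.
    """
    flags: List[bool] = []
    remaining = 0
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        level = frame_rms(samples[start:start + frame_len])
        if level >= threshold:
            remaining = hangover  # loud frame: (re)start the hangover window
            flags.append(True)
        elif remaining > 0:
            remaining -= 1        # quiet, but still inside the hangover window
            flags.append(True)
        else:
            flags.append(False)
    return flags
```

For example, `detect_voice([0] * 640 + [1000, -1000] * 320 + [0] * 3200)` yields two silent frames, two speech frames, five hangover frames and then five silent frames. The hangover is the design choice that matters most here: without it, natural gaps between words would chop one request into several.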

Audio to Text

The captured audio is either:

Sent to the cloud for speech-to-text (e.g., OpenAI Whisper or Google Cloud Speech-to-Text with its Chirp models), or

Converted locally, if real-time response or privacy is critical.
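Whether cloud speech-to-text is practical depends partly on how much audio has to be uploaded per request. The back-of-envelope sketch below assumes a 5-second utterance captured at 16 kHz, 16-bit mono, an Opus bitrate of 24 kbit/s, and a 1 Mbit/s uplink. All of these are illustrative assumptions, and the results ignore container, protocol and round-trip overheads.

```python
def pcm_bytes(seconds: float, sample_rate: int = 16_000,
              bits_per_sample: int = 16, channels: int = 1) -> int:
    """Size of uncompressed PCM audio, in bytes."""
    return int(seconds * sample_rate * channels * bits_per_sample / 8)


def encoded_bytes(seconds: float, bitrate_bps: int) -> int:
    """Approximate size of a constant-bitrate stream (container overhead ignored)."""
    return int(seconds * bitrate_bps / 8)


def upload_ms(num_bytes: int, uplink_bps: float) -> float:
    """Transfer time over an uplink, ignoring protocol overhead and latency."""
    return num_bytes * 8 * 1000 / uplink_bps


utterance_s = 5.0
raw = pcm_bytes(utterance_s)                   # uncompressed capture
opus = encoded_bytes(utterance_s, 24_000)      # assumed Opus bitrate
raw_ms = upload_ms(raw, 1_000_000)             # assumed 1 Mbit/s uplink
opus_ms = upload_ms(opus, 1_000_000)
```

Under these assumptions the raw clip is 160,000 bytes (about 1.28 s to upload), against roughly 15,000 bytes (about 0.12 s) once encoded. That difference is why encoding choices show up directly in perceived response time on Wi-Fi or LTE.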

Compression & Transfer

Efficient encoding (e.g., FLAC for lossless compression or Opus for much lower bitrates) and small message sizes are key, especially over Wi-Fi or LTE.

Querying the LLM

Once the text is prepared, it's sent to a cloud-based language model (such as OpenAI's GPT models or open-source equivalents).

Expect:

50–300 tokens per request (depending on user input and prompt size)

Cost estimates: around €0.001–€0.01 per call, depending on LLM provider and prompt structure
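Per-call costs add up quickly across a fleet of devices, so it helps to model them early. The minimal sketch below assumes that input (prompt) and output (generated) tokens are priced separately, as many providers do. The prices, call rates and fleet size are hypothetical placeholders; check your provider's current rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical EUR prices per million tokens; real rates vary by provider and model."""
    input_per_million: float
    output_per_million: float


def cost_per_call(input_tokens: int, output_tokens: int,
                  pricing: TokenPricing) -> float:
    """Cost of one request, with prompt and generated tokens priced separately."""
    return (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000


def monthly_cost(per_call: float, calls_per_day: int, devices: int,
                 days: int = 30) -> float:
    """Fleet-wide spend for one month of usage."""
    return per_call * calls_per_day * devices * days


pricing = TokenPricing(input_per_million=2.0, output_per_million=8.0)  # hypothetical
per_call = cost_per_call(200, 100, pricing)
fleet = monthly_cost(per_call, calls_per_day=20, devices=1000)
```

With these assumed figures, a 300-token exchange costs about €0.0012, within the range above. Twenty calls a day across 1,000 devices comes to roughly €720 a month, which is why conditional cloud calls and tight prompts matter.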

Response & Action

The response text is then:

Played back as speech (TTS) on the device

Used to trigger an action

Displayed in an app interface
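One way to choose between these paths is to ask the model for a small structured reply and route on it. The sketch below assumes a hypothetical JSON shape such as `{"type": "action", "name": "lights_on", "text": "Turning the lights on."}`. The `speak`, `display` and action callbacks stand in for your own TTS, app and device-control code. Anything that is not valid JSON, and any unknown action, falls back to speech.

```python
import json
from typing import Callable, Dict


def dispatch_response(raw: str,
                      speak: Callable[[str], None],
                      display: Callable[[str], None],
                      actions: Dict[str, Callable[[], None]]) -> str:
    """Route one LLM reply and return the path taken: "speak", "action" or "display"."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError:
        speak(raw)  # plain-text reply: just say it
        return "speak"
    if not isinstance(msg, dict):
        speak(raw)  # valid JSON but not the expected object shape
        return "speak"
    kind = msg.get("type", "speak")
    text = str(msg.get("text", ""))
    name = msg.get("name")
    if kind == "action" and isinstance(name, str) and name in actions:
        actions[name]()
        if text:
            speak(text)  # confirm the action aloud
        return "action"
    if kind == "display":
        display(text)
        return "display"
    speak(text)  # default, including unknown actions
    return "speak"
```

Keeping the fallback to speech means a malformed model reply degrades into a spoken answer rather than a silent device.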

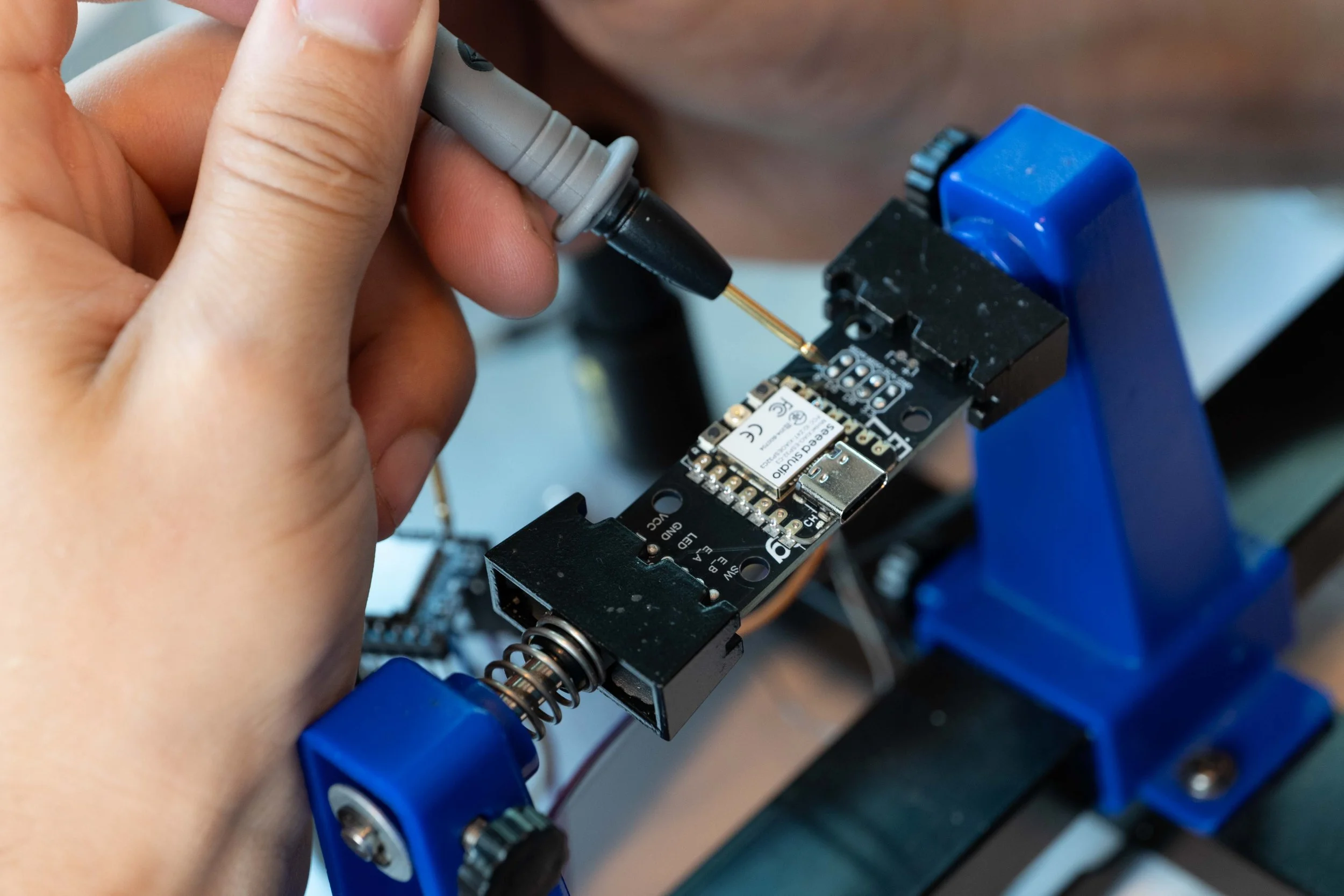

A technician uses a multimeter to check continuity on a newly soldered circuit board — verifying signal integrity and connection reliability.

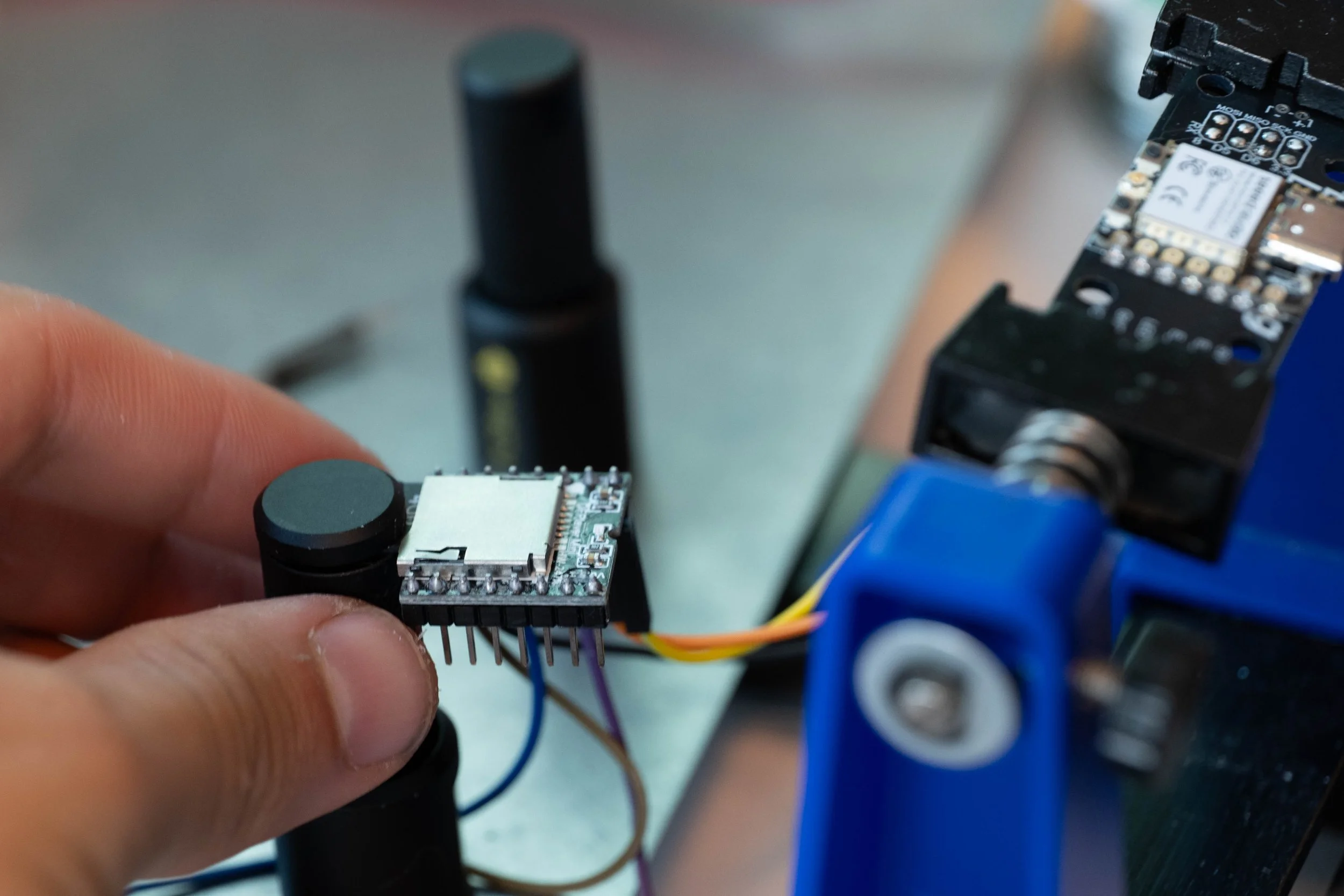

Connecting multiple circuit boards with wiring in preparation for testing — a critical step in enabling reliable signal and power flow for LLM integration in hardware systems.

Device Design: Our Approach

We specialise in making AI devices not just smart, but beautifully integrated. That includes:

Custom PCBs with low-power audio front ends, Wi-Fi/BLE modules, and secure boot firmware

Form factor design that considers speaker layout, mic isolation, and thermal management

Enclosure design that is ergonomic, visually appealing, and fit for purpose, with prototyping options such as CNC machining, SLS printing, and cast urethane for high-quality pre-production runs

Seamless integration of audio, touch, gesture, or even camera input if needed

Human Interface Matters

AI should feel natural. We make sure the key bases are covered, then dial in the design, tweaking and improving it until the device works smoothly and is a pleasure to interact with.

We prototype and validate:

Voice interaction flows (what happens when it mishears?)

Minimalist buttons or capacitive sensors

LED or haptic feedback for silent cues

Mobile app connectivity for setup, logging, or fallback input

Our goal: make it feel like an assistant, not a device.
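Handling a mishearing is mostly a decision rule around transcription confidence. Below is a hypothetical policy that accepts, confirms ("Did you mean...?"), asks the user to repeat, or falls back to the app or a button. It assumes the speech-to-text service reports a confidence score between 0 and 1; not every service does, and the thresholds are illustrative assumptions to be tuned in user testing.

```python
from dataclasses import dataclass


@dataclass
class Transcript:
    text: str
    confidence: float  # assumed 0.0-1.0; availability depends on the STT service


def next_step(t: Transcript, attempt: int, accept_at: float = 0.75,
              confirm_at: float = 0.5, max_attempts: int = 2) -> str:
    """Return "query", "confirm", "reprompt" or "fallback".

    `attempt` counts how many times the user has spoken so far (1-based).
    """
    if not t.text.strip():
        return "reprompt" if attempt < max_attempts else "fallback"
    if t.confidence >= accept_at:
        return "query"     # confident enough to send straight to the LLM
    if t.confidence >= confirm_at:
        return "confirm"   # read it back and ask for a yes/no
    return "reprompt" if attempt < max_attempts else "fallback"
```

Capping the retries matters: after a couple of failed attempts, pointing the user to a button or the app is usually less frustrating than another "Sorry, I didn't catch that."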

Battery Life & Connectivity

We help balance power budgets by selecting the right mix of:

Low-power MCUs (e.g., ESP32, nRF52, RP2040)

Efficient wake-on-sound architectures

Sleep states and conditional cloud calls to save energy and cost

Typical runtime for always-on voice devices:

4–20 hours on lithium-polymer (LiPo) cells, depending on usage pattern

Recharge via USB-C, Qi wireless, or dock
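Runtime figures like these come from a simple power budget. You weight the current drawn in each operating state by the fraction of time spent there, then divide that average into the usable cell capacity. The sketch below uses illustrative, assumed numbers: a 500 mAh cell, 20 mA while listening for the wake word, 150 mA while streaming over Wi-Fi, and 80% usable capacity. Real currents should be measured on your own hardware.

```python
from typing import Iterable, Tuple


def average_current_ma(states: Iterable[Tuple[float, float]]) -> float:
    """Time-weighted average current from (current_mA, fraction_of_time) pairs."""
    states = list(states)
    total = sum(fraction for _, fraction in states)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("time fractions must sum to 1")
    return sum(current * fraction for current, fraction in states)


def runtime_hours(capacity_mah: float, avg_ma: float, usable: float = 0.8) -> float:
    """Estimated runtime; `usable` derates capacity for cut-off voltage and ageing (assumed 80%)."""
    return capacity_mah * usable / avg_ma


avg = average_current_ma([(20.0, 0.9),    # listening for wake word, 90% of the time
                          (150.0, 0.1)])  # streaming to the cloud, 10% of the time
hours = runtime_hours(500, avg)
```

Here the average is 33 mA, giving roughly 12 hours. Because the active state dominates the average despite its short duty cycle, trimming cloud calls often buys more runtime than shaving the idle current.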

A reel of MEMS microphones, packaged and ready for automated placement. These components are relatively large compared to the ultra-compact parts we typically mount on our densely packed boards.

A microplacer accurately selects and positions miniature components onto a solder-pasted circuit board. Once placed, the board moves through a reflow oven, where heat secures the components in place for reliable electrical and mechanical connections.

App & Cloud Stack

We support or integrate:

Mobile apps (Flutter or native) for onboarding and history

Backend services to route ASR/LLM traffic (Node.js, Python, Firebase, etc.)

Secure OTA updates and real-time metrics tracking
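At a minimum, a secure OTA update refuses images that fail an integrity check or that would roll the firmware back to an older version. The sketch below shows only those two checks. For illustration it uses an HMAC-SHA256 tag from Python's standard library with a shared key. Production secure-boot chains typically use asymmetric signatures (for example ECDSA or Ed25519) verified by the bootloader, so the device holds no secret that could sign new firmware. The key and version tuples are hypothetical.

```python
import hashlib
import hmac
from typing import Tuple


def sign_image(image: bytes, key: bytes) -> str:
    """Server side: compute an HMAC-SHA256 tag for a firmware image."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_image(image: bytes, tag_hex: str, key: bytes, expected_size: int) -> bool:
    """Device side: accept the image only if its size and tag both match."""
    if len(image) != expected_size:
        return False
    expected = hmac.new(key, image, hashlib.sha256).hexdigest()
    # compare_digest takes time independent of where the strings differ
    return hmac.compare_digest(expected, tag_hex)


def should_install(new_version: Tuple[int, ...], current: Tuple[int, ...]) -> bool:
    """Anti-rollback: only move forward, comparing (major, minor, patch) tuples."""
    return new_version > current
```

Checking the version as well as the tag matters because an old, genuinely signed image with a known bug would otherwise pass verification.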

Let’s Build Your AI Product

Whether you're prototyping a voice assistant, wearable, embedded AI tool, or something entirely new, Engineering Lab can help you:

Validate your AI hardware concept

We help you choose the right architecture, components, and integrations, from LLM APIs to microphone arrays.

Prototype fast, iterate smarter

Get working prototypes in hand quickly, with fully integrated hardware and firmware tailored to your use case.

Test cost, performance, and scalability

We run feasibility checks early so you avoid surprises later: real-world power use, API call costs, latency, and more.

Deliver polished, pitch-ready prototypes

Functional, refined, and presentation-ready units for user testing, investor demos, or early customer trials.

Transition to production with confidence

We prepare your design for manufacturing: finalised electronics, tested enclosures, and a clean handoff to scale.

AI in hardware is no longer science fiction—it’s a product category. Let’s build it right.

Ready to build your AI hardware product?

Let us help you turn your idea into something real. Whether you're at concept stage or ready to prototype, we can support you every step of the way. As is typical for this kind of work, we operate under NDA to protect your intellectual property throughout the process.

We’ve done this before — now let’s do it for you.
